What scares SEOs the most in 2023? Which new SEO nightmares worry us, and which ones persist? A few days ago I had the great opportunity to present on this at the Google Search Central Live event in Zurich, and I’d like to go through some of the top takeaways here too.

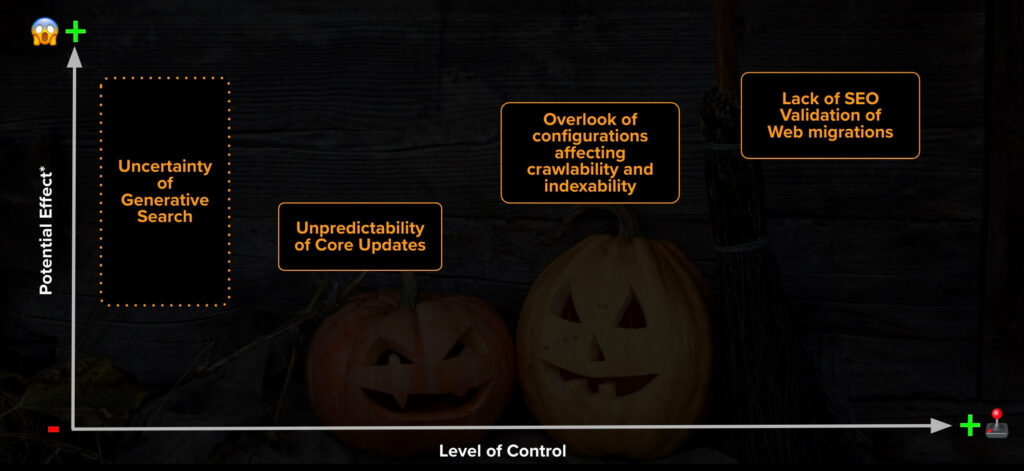

I asked around on social media and got responses that fall into 4 main areas, each with a different level of control and potential (negative) impact:

- The uncertainty of Google generative search

- The unpredictability of Google core updates

- Overlooking configurations affecting crawlability and indexability

- Lack of SEO validation of Web migrations

Let’s go through them and see the best ways to tackle each one!

1. The uncertainty of generative search

We’re afraid of losing organic search traffic with the eventual launch of the SGE (Search Generative Experience). It’s important to note, though, that the SGE has gone through many refinements: it now has less initial visibility by default and includes more links.

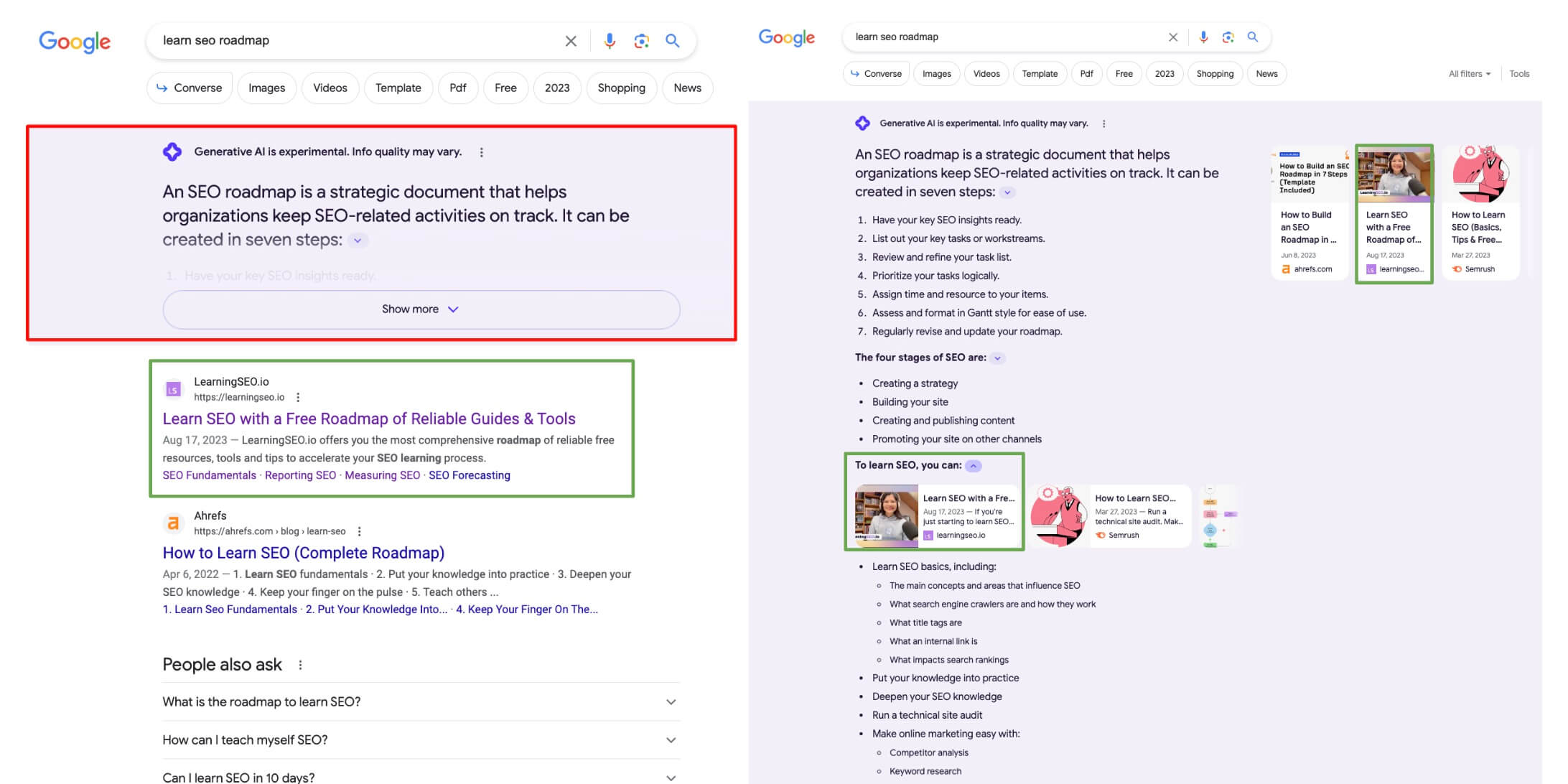

For many queries no snapshot is included at all. For others, we need to click a “Get an AI-Powered overview for this search” option to generate it, or the snapshot is only partially shown, with a “show more” option to expand it.

This also has to do with user satisfaction and Google’s business model. Referring users to sites where they can actually do what they’re looking for (buy, reserve, or convert with a service, product, or information) is at the core of how search engines work and make money, so there’s certainly an incentive to get it right. This is what Sundar Pichai, Google’s CEO, has said they’re focusing on before its release.

However, what is more certain is that some SGE snapshots could shift users to different page types (and providers, including competitors) during the search journey by satisfying their search intent faster.

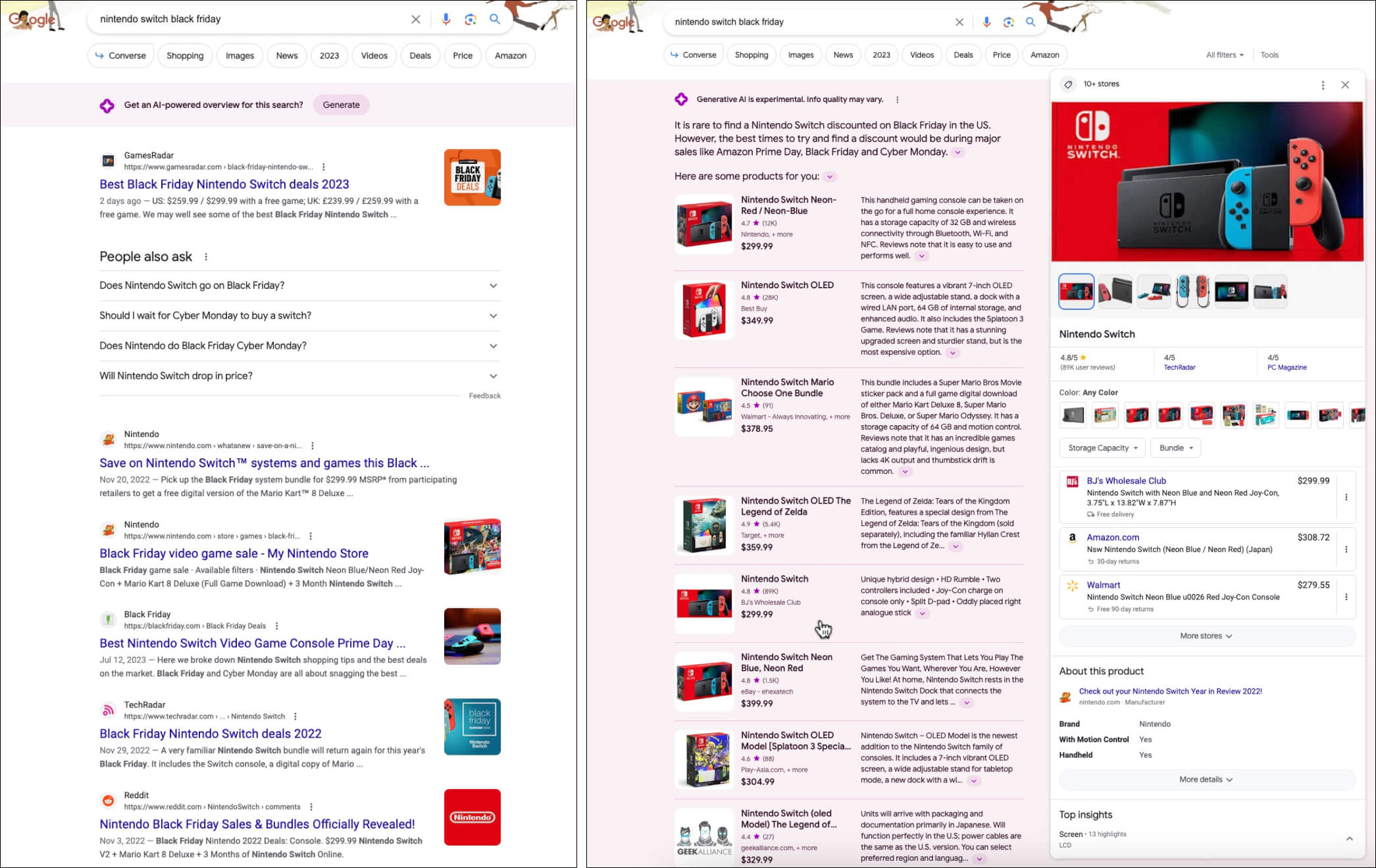

For example, when searching for “nintendo switch black friday”, a query for which Nintendo Switch guides and listings currently rank in the top organic search results, the SGE snapshot directly generates a list of relevant products along with a brief introduction. These products are clickable, and their details are shown in their product knowledge panel, with options to buy them from different retailers.

You can see more examples like this in an analysis I did: “How Google’s SGE Snapshots Change Top Black Friday and Cyber Monday SERPs”.

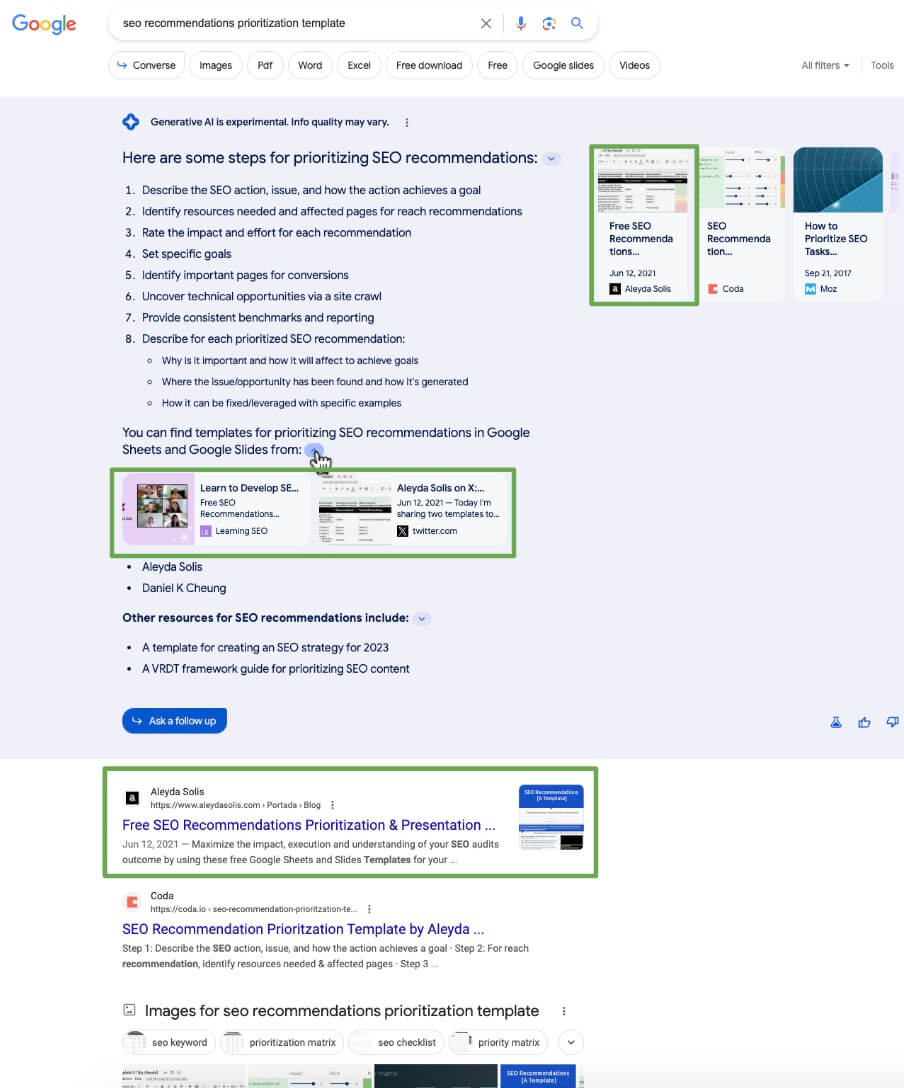

It’s important to note, though, that this is not the case in many scenarios. For example, when searching for “SEO recommendations prioritization template”, even if the snapshot does a good job of summarizing the SEO prioritization steps, someone who wants a template won’t be satisfied with it and will still need to click through, whether on the top-ranked page from my site or on my site’s page that is also listed within the snapshot.

In general, I see 3 main types of SGE snapshots, with different levels of risk:

- Duplicative of organic search results (e.g. a snapshot resembling a local maps pack shown above an actual local maps pack), with a trivial impact on the ranked pages’ traffic.

- Summarizing and complementary to organic search results (e.g. a snapshot explaining the steps to prioritize SEO recommendations when looking for a template), which I expect to have a trivial impact too.

- Accelerators of search intent, which are the ones I actually see having a much higher impact on the ranked pages’ traffic, since the snapshot directly satisfies what users are looking for (e.g. a search for “nintendo switch black friday” that returns products already on discount in the SGE, with a way to buy them from retailers, without having to visit the guides or listings ranked in the top search results).

So what should we do about it, especially in this last accelerator scenario?

I would highly advise verifying for yourself how the SGE changes the visibility and intent fulfillment of your ranked pages for key queries across your site’s search journey, to identify risks and opportunities, e.g. creating more or complementary content, or improving and optimizing the types of pages highlighted further in the snapshot.

Take a look at Mike King’s SGE study on 91K queries and its result patterns for a data-based assessment too.

2. The unpredictability of Google core updates

I know the feeling. Even when we’re working hard on optimizing our own (or our clients’) sites, many of us are afraid of getting hit.

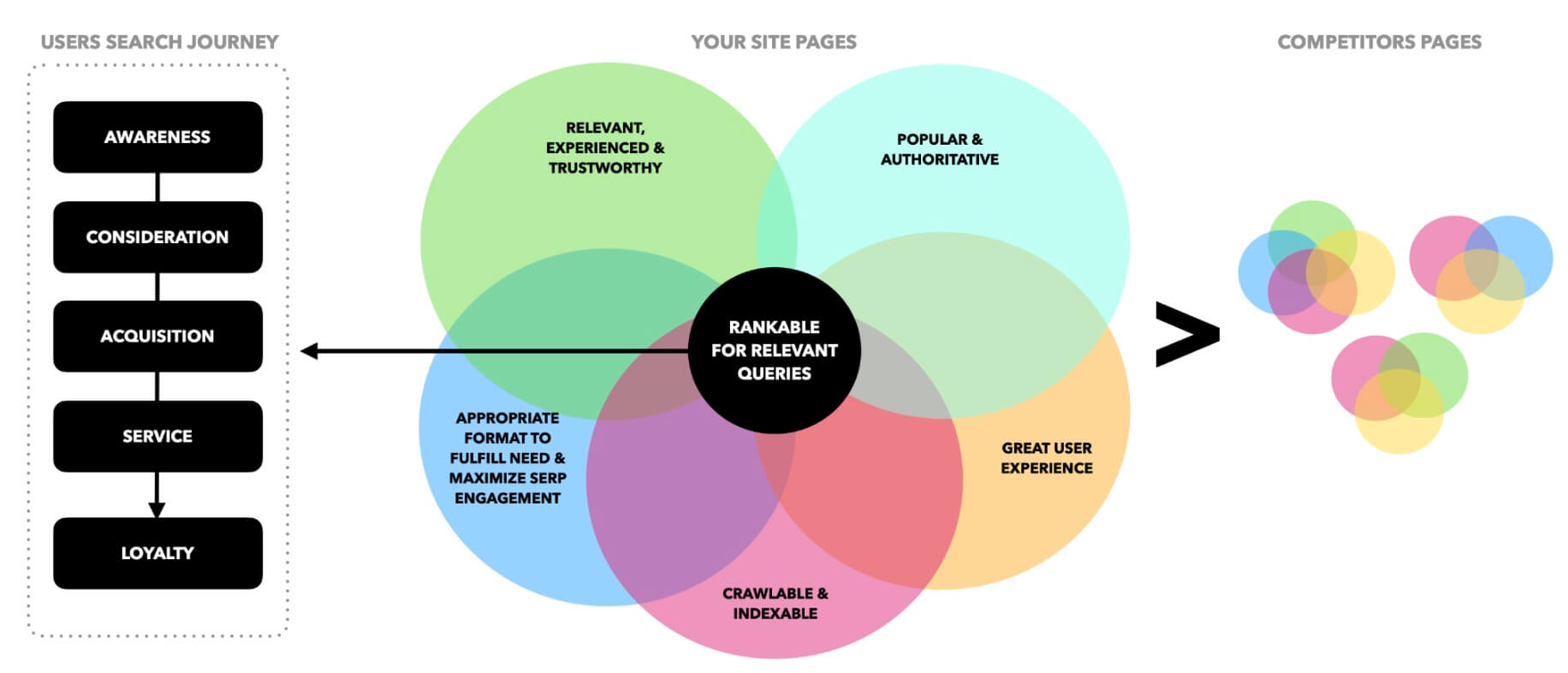

The way to address this is by optimizing our site’s pages to become the best answer across our target users’ entire search journey, from awareness to loyalty: improving our content’s crawlability, indexability, format, relevance, experience, trustworthiness, popularity, and overall quality so that it is better and more satisfying than our competitors’.

This is much easier said than done due to the usual lack of SEO resources, time constraints, buy-in restrictions, and long wait times before SEO strategy tasks get executed.

This is also why it’s fundamental to prioritize the actions that matter most, those with the highest impact and lowest effort, first, especially when execution is slow. This not only lets you achieve your SEO goals faster, but also lets you show positive trends to gain buy-in for all of the other activities in your roadmap. Here’s an SEO prioritization template I created to facilitate this task.
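The impact-vs-effort idea can be sketched in a few lines of Python. This is a hypothetical illustration, not the template itself: the `SeoTask` class, the 1 to 5 scoring scales, and ranking by impact-to-effort ratio (with impact as a tie-breaker) are all assumptions you would adapt to your own scoring.

```python
from dataclasses import dataclass


@dataclass
class SeoTask:
    name: str
    impact: int  # hypothetical 1-5 scale: expected effect on SEO goals
    effort: int  # hypothetical 1-5 scale: resources and time needed


def prioritize(tasks: list[SeoTask]) -> list[SeoTask]:
    """Sort tasks so that high-impact, low-effort ones come first."""
    for task in tasks:
        if task.effort <= 0:
            raise ValueError(f"effort must be positive for {task.name!r}")
    # Primary key: impact-to-effort ratio (descending); tie-break on raw impact (descending).
    return sorted(tasks, key=lambda t: (-t.impact / t.effort, -t.impact))


if __name__ == "__main__":
    backlog = [
        SeoTask("Rewrite category page copy", impact=3, effort=4),
        SeoTask("Fix noindex on product pages", impact=5, effort=1),
        SeoTask("Add internal links to guides", impact=4, effort=2),
    ]
    for task in prioritize(backlog):
        print(f"{task.name}: impact={task.impact}, effort={task.effort}")
```

The ratio is just one simple way to combine both dimensions; a weighted score or an impact/effort matrix would work equally well.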

But what happens if you’re hit “while” becoming the best-in-class answer for your targeted queries? We should first understand whether we’ve been hit for queries that actually matter and require action because they’re negatively impacting our SEO goals… and not just seeing lower rankings for a non-relevant term that we don’t really target and that is trivial for the business.

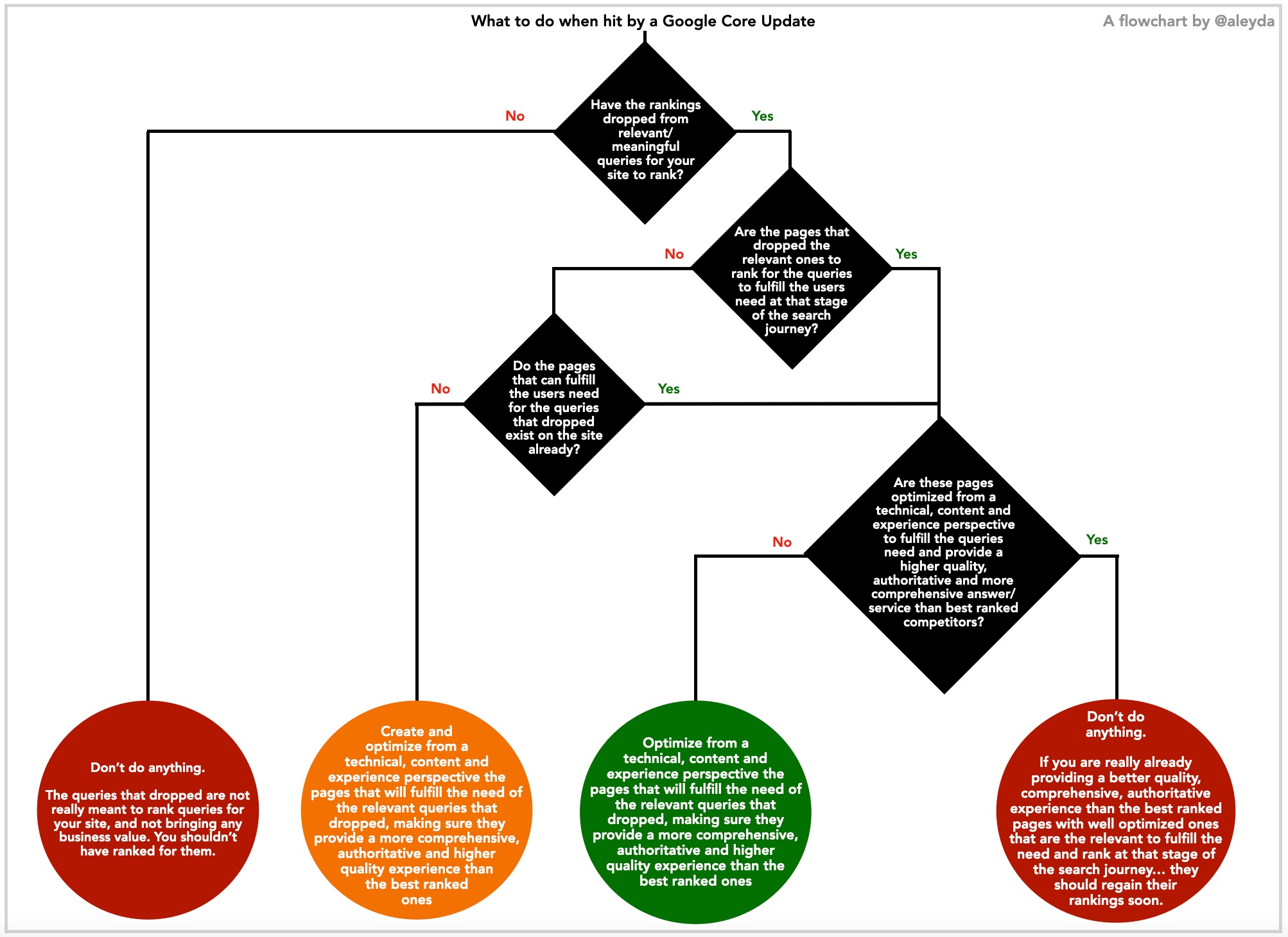

I created this flowchart for that purpose, which I hope helps you assess whether you should actually do something or not:

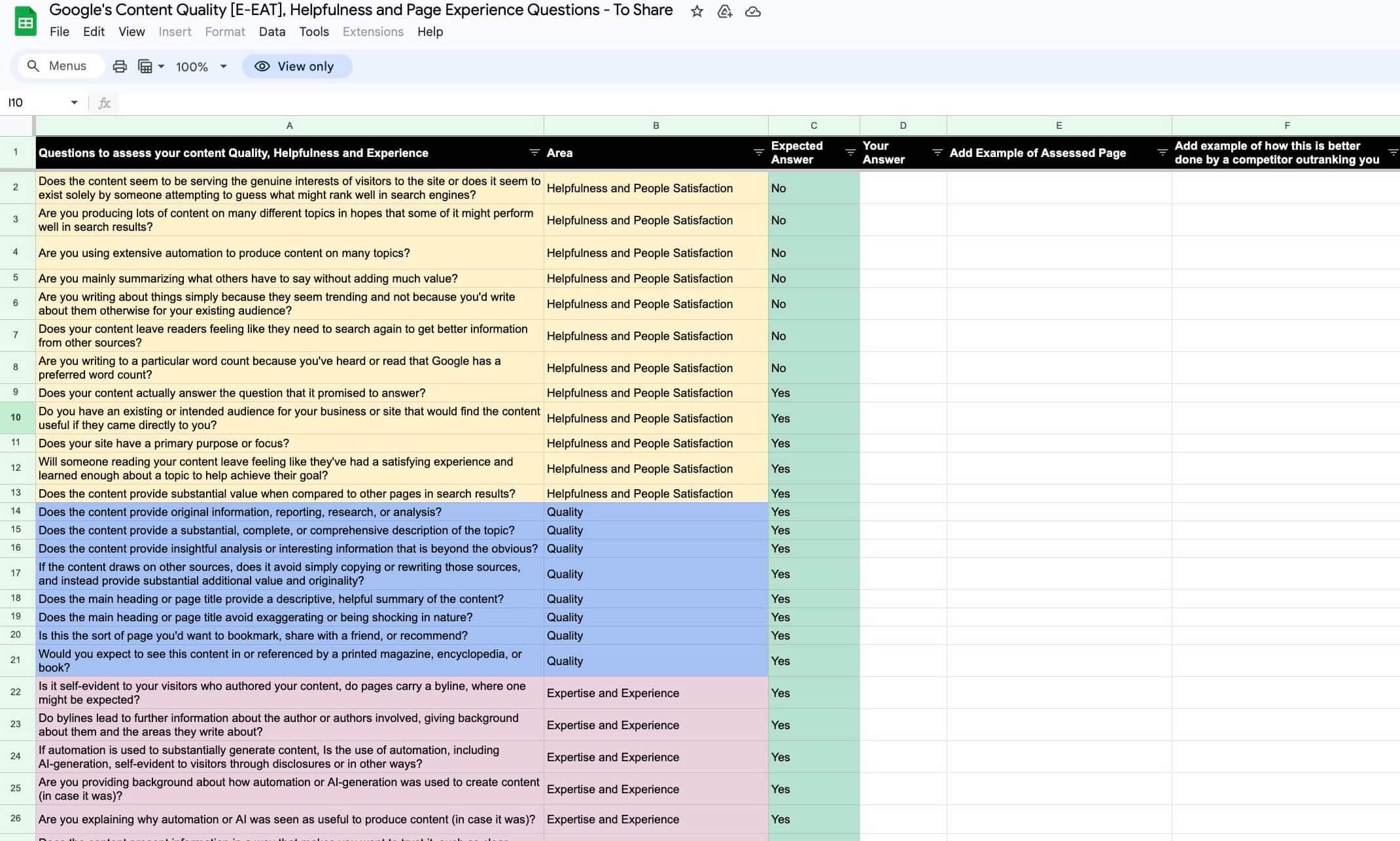
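As a rough complement, the first question raised above (are the drops affecting queries that actually matter?) can be approximated in Python. This is a minimal sketch, not a transcription of the flowchart: it assumes you’ve exported clicks per query for comparable periods before and after the update (e.g. from GSC) into dictionaries, and the function name and the 20% threshold are hypothetical choices.

```python
def relevant_drops(
    clicks_before: dict[str, int],
    clicks_after: dict[str, int],
    target_queries: set[str],
    min_drop_pct: float = 20.0,  # hypothetical threshold for a "meaningful" drop
) -> dict[str, float]:
    """Return targeted queries whose clicks fell by at least min_drop_pct percent."""
    flagged = {}
    for query in target_queries:
        before = clicks_before.get(query, 0)
        after = clicks_after.get(query, 0)
        if before == 0:
            continue  # no baseline clicks, so a percentage drop can't be computed
        drop_pct = (before - after) / before * 100
        if drop_pct >= min_drop_pct:
            flagged[query] = round(drop_pct, 1)
    # Largest drops first, so the most urgent queries are reviewed first.
    return dict(sorted(flagged.items(), key=lambda kv: kv[1], reverse=True))


before = {"seo template": 400, "seo checklist": 200, "random term": 50}
after = {"seo template": 380, "seo checklist": 100, "random term": 5}
print(relevant_drops(before, after, {"seo template", "seo checklist"}))
```

Here the 90% drop for "random term" is ignored because it isn’t a targeted query, and the 5% dip for "seo template" stays below the threshold, so only "seo checklist" is flagged for review.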

If you see that you have actually been hit by a core update for relevant queries, do an assessment using Google’s content quality questions and optimize accordingly. I’ve created a checklist for this that you can use here:

The more you advance and tackle the major issues you see hurting (or at risk of hurting) your key pages, the lower the risk will be.

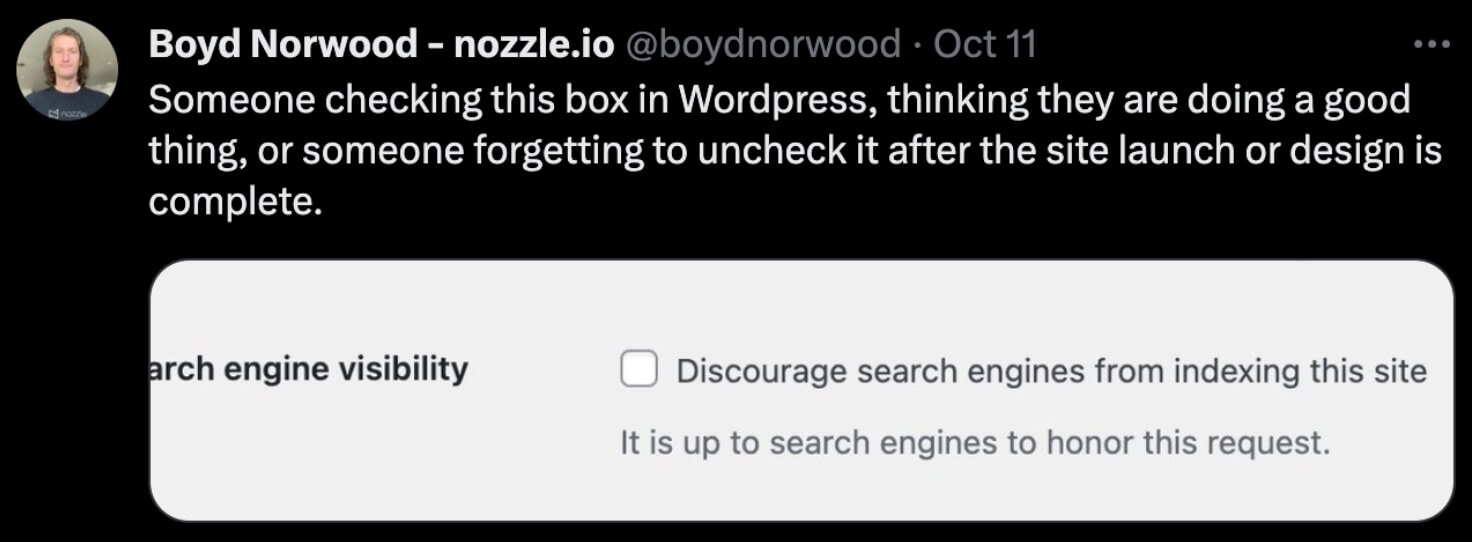

3. Overlooked configurations that can harm critical pages’ crawlability and indexability

It’s so easy to overlook a checkbox or a tag validation that can end up noindexing the whole site. These are the types of recurring technical issues that can not only harm what you have achieved so far, but also hold back the execution of the overall SEO process.

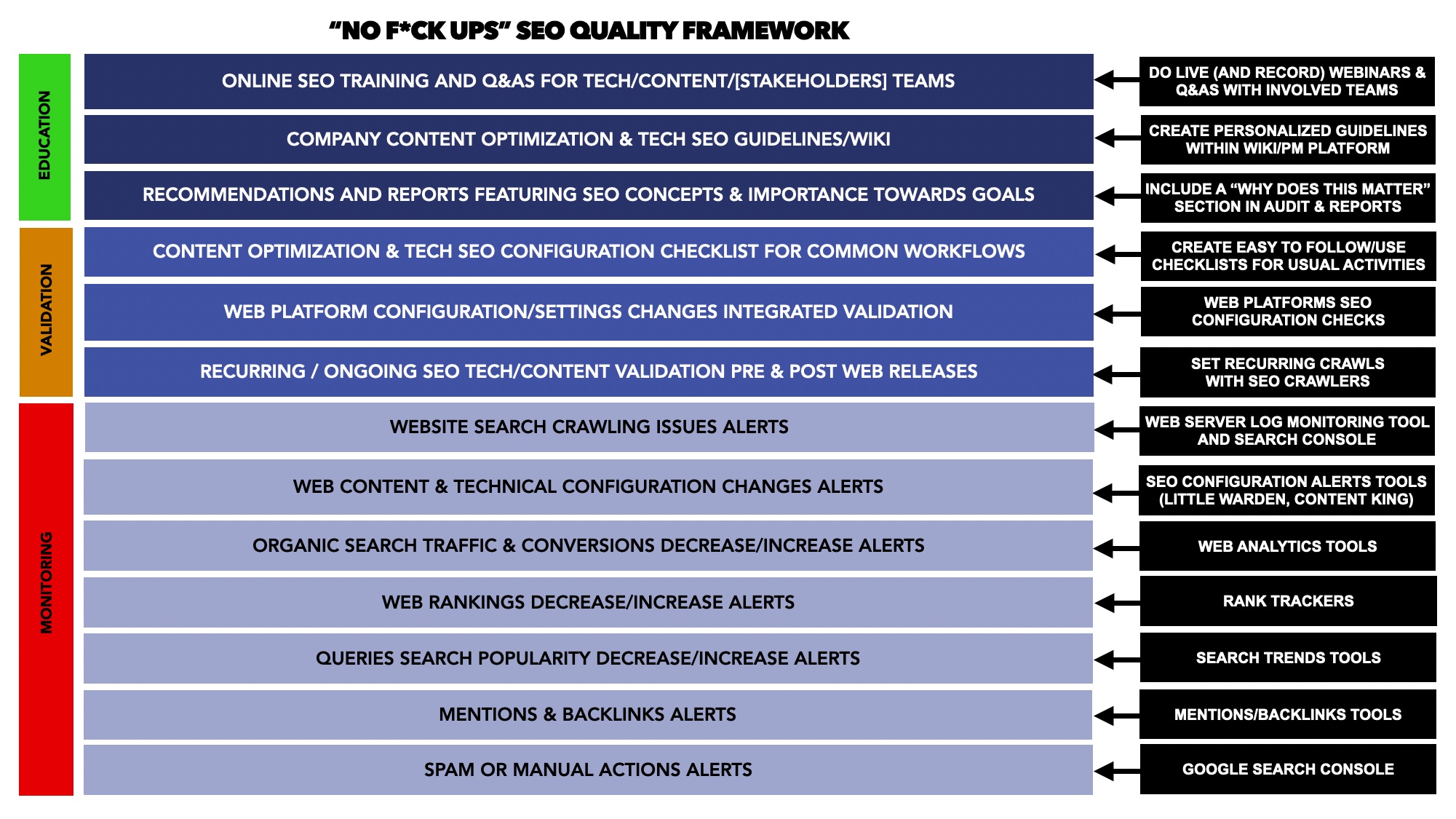

Rather than being reactive about it, you need to be proactive by setting up an SEO quality framework across the whole SEO process. It should minimize bugs and mistakes through education and validation processes involving the organization and its different stakeholders, and ensure that whenever those few errors do happen, they are caught fast by a proactive monitoring system, which you should also set up. This is a topic I’ve spoken about in the past, and you can see slides with tips, resources and tools here.

A few key points I would like to highlight to avoid those bad “SEO f*ck-ups”:

- Education: Evangelize SEO configuration checklists and agree with the relevant areas and team members to use them when developing any update or release. If they don’t use them, the checklists won’t matter, so it’s critical that you get their support and that they actually integrate your checklists into their workflows.

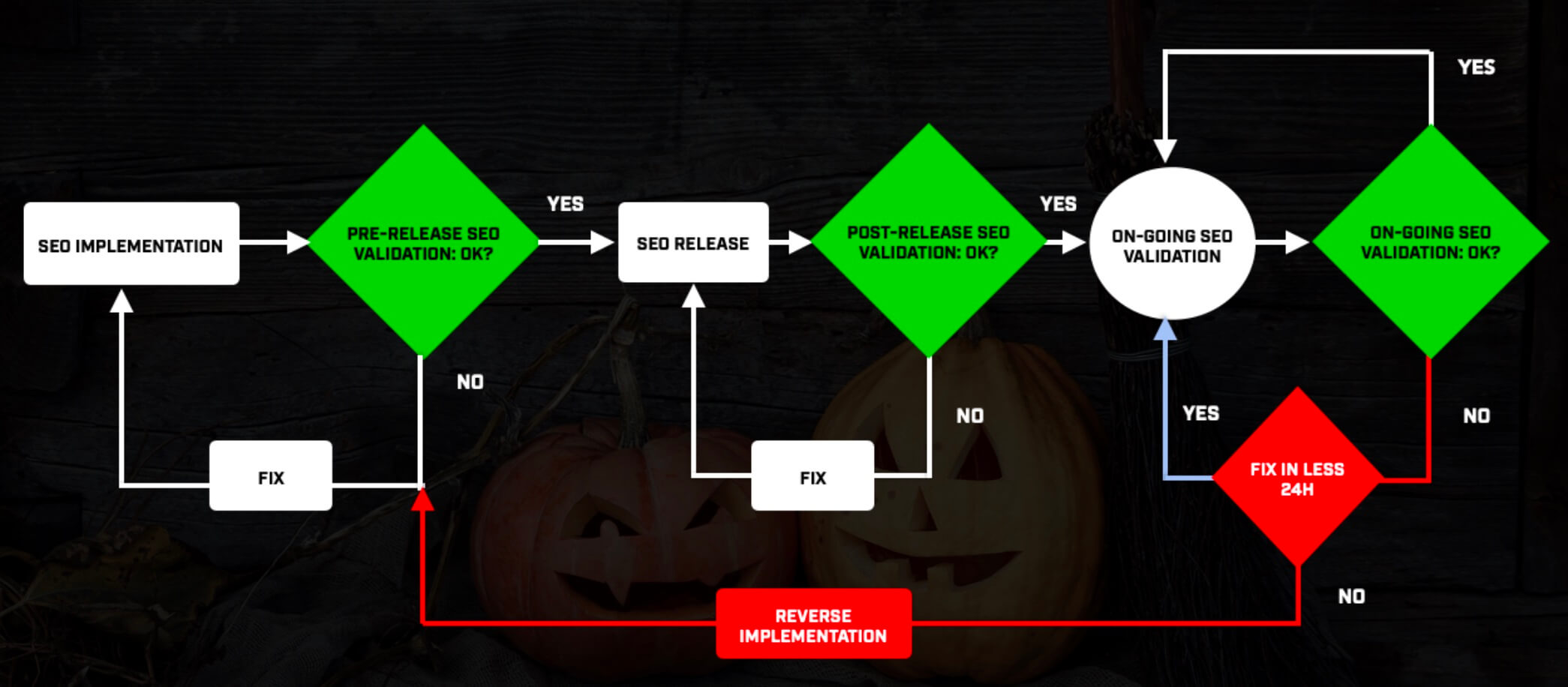

- Validation: Agree on a release validation workflow, before and after launching, taking “what-ifs” into account (e.g. what happens if something is released with a bug that can harm your site’s crawlability? Is it reverted if it cannot be fixed within the first 24 hours?). Even if the release is considered trivial, it should first be tested in a staging environment that actually replicates the production one and is non-crawlable.

- Monitoring: Continuously monitor your site’s meaningful SEO configurations across the different page types to ensure they keep their desired status. Use real-time SEO crawlers (such as ContentKing, LittleWarden or SEOradar) to be alerted of unwanted SEO changes fast, with warnings delivered to your email or project management (PM) system.
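The monitoring tools above run these checks for you, but a minimal sketch of the kind of check involved can help when defining release validation criteria. This Python example assumes you’ve already fetched a page’s HTML and response headers (it makes no network calls); `check_indexability` and its issue strings are hypothetical names, and it only covers robots meta tags, the `X-Robots-Tag` header, and the canonical tag.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects <meta name="robots"/"googlebot"> contents and the <link rel="canonical"> href."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # HTMLParser lowercases tag and attribute names
        name = (attrs.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            self.robots.append((attrs.get("content") or "").lower())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")


def check_indexability(html, headers, expected_canonical=None):
    """Return a list of issues that would make a page non-indexable or mis-canonicalized."""
    issues = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any("noindex" in content for content in parser.robots):
        issues.append("meta robots noindex")
    # HTTP header names are case-insensitive, so match X-Robots-Tag in any casing.
    x_robots = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    if "noindex" in x_robots.lower():
        issues.append("X-Robots-Tag noindex")
    if expected_canonical is not None and parser.canonical != expected_canonical:
        issues.append(f"canonical is {parser.canonical!r}, expected {expected_canonical!r}")
    return issues


page = '<head><meta name="robots" content="NOINDEX, follow"></head>'
print(check_indexability(page, {"Content-Type": "text/html"}))
```

Run against production pages, an empty list is the desired status; in a non-crawlable staging environment, a noindex finding may be intentional, so the expected result depends on the environment.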

This is how you say goodbye to continuous SEO mistakes or bugs!

4. The lack of SEO validation in Web migrations

Somehow, in 2023, not all websites incorporate SEO into Web migrations from the start. This is a persistent issue with all structural Web changes, from URL changes to CMS migrations and redesigns, and it can generate some of the worst SEO horror stories.

But it’s also not fair to only blame others, since it’s up to us to evangelize the importance of SEO and influence decisions, without waiting for a Web migration to happen in the first place! Here are a few tips to avoid these scenarios:

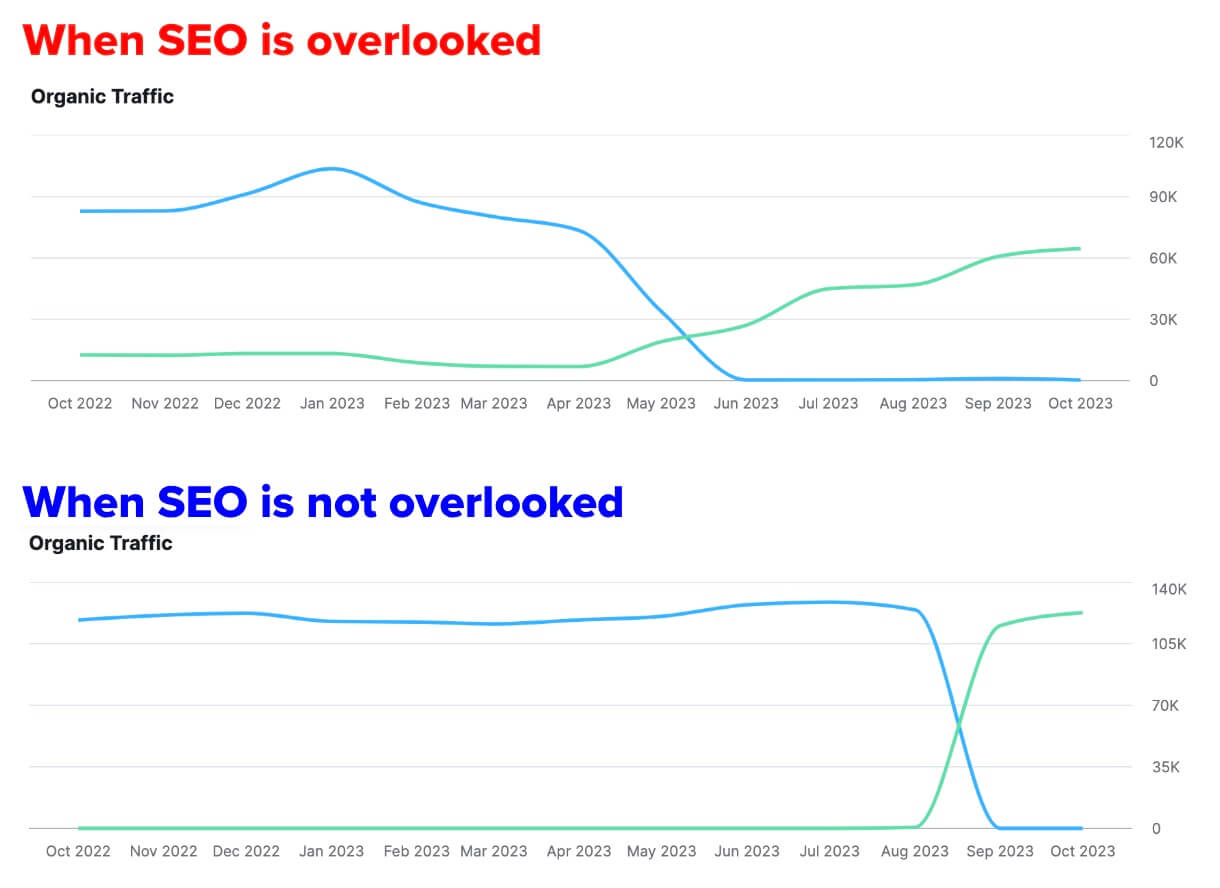

- When starting the SEO process, educate decision makers about structural Web changes with examples (show what happens to a site’s organic search traffic when SEO is not involved from the start of a Web migration), and agree that you will be informed and involved from the start.

- Use The Migration Archive from MJ Cachón to share with decision makers and stakeholders examples of bad outcomes when SEO is not involved.

- Share and go through a Web migration checklist (like this one I created) with the development team in an SEO Wiki, and agree to use it when necessary. This checklist should explain the why of each task so the team understands its criticality, not only for SEO but also for UX and conversions.

- When running your recurring Web crawls, always store the raw and rendered HTML, so you have a full copy of your previous Web versions and can check their old configurations and content if anything goes wrong.

- Configure your real-time Web crawling and alerts (using tools like ContentKing, LittleWarden or SEOradar) so you are warned whenever your top URLs change type.
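If your crawler doesn’t already keep historical copies, storing them yourself can be as simple as writing each snapshot to a dated file. This is a hypothetical Python sketch: it assumes you already obtain the raw and rendered HTML from your crawler (rendering requires a JS-capable crawler or headless browser, which is out of scope here), and the folder layout and function names are illustrative choices.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def _url_key(url: str) -> str:
    # Hash the URL so any URL maps to a safe, fixed-length folder name.
    return hashlib.sha256(url.encode("utf-8")).hexdigest()[:16]


def save_snapshot(base_dir, url, html, kind="raw", fetched_at=None):
    """Write one HTML snapshot to base_dir/<url-hash>/<UTC timestamp>-<kind>.html."""
    # fetched_at is assumed to be a UTC datetime; the trailing "Z" in the name marks UTC.
    fetched_at = fetched_at or datetime.now(timezone.utc)
    folder = Path(base_dir) / _url_key(url)
    folder.mkdir(parents=True, exist_ok=True)
    # Keep the original URL next to its snapshots so the hash can be traced back.
    (folder / "url.txt").write_text(url, encoding="utf-8")
    path = folder / f"{fetched_at.strftime('%Y%m%dT%H%M%SZ')}-{kind}.html"
    path.write_text(html, encoding="utf-8")
    return path


def list_snapshots(base_dir, url, kind="raw"):
    """Return stored snapshot paths for a URL, oldest first (timestamps sort lexically)."""
    folder = Path(base_dir) / _url_key(url)
    return sorted(folder.glob(f"*-{kind}.html")) if folder.exists() else []
```

For example, calling `save_snapshot("snapshots", url, raw_html)` and `save_snapshot("snapshots", url, rendered_html, kind="rendered")` after each crawl lets you later diff any two versions of a URL returned by `list_snapshots`.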

If, despite all this, a structural Web change is released without SEO validation, you need radical prioritization to recover fast; don’t follow the full migration checklist:

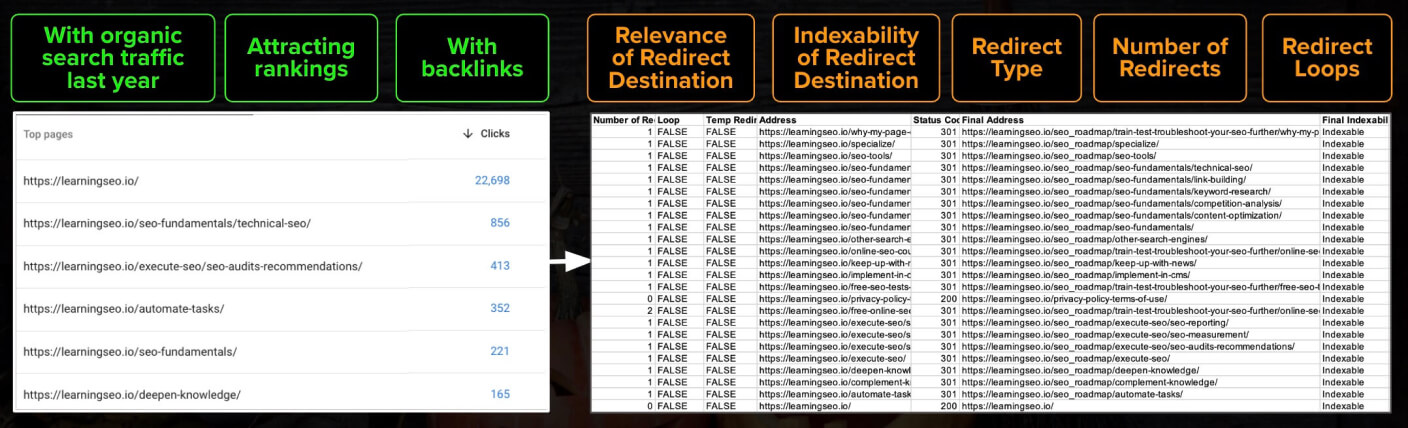

- Gather the top URLs (those with organic search traffic in the last year, rankings, or backlinks) and crawl them in list mode to identify their redirect status and assess optimization needs (relevance of the redirect destination, indexability of the redirect destination, redirect type, number of redirects, redirect loops).

- Remember to enable JS rendering, crawl across subdomains, and follow redirects.

- Don’t forget to check the destination URLs’ configuration, besides their redirect status and relevance.

- Check everything from crawlability to indexability, as well as reliance on client-side rendered (CSR) JS, for the core areas of the new URLs.

- Spot and assess the raw vs rendered main content changes easily using Screaming Frog’s “View Source” report.

- Remember that the SEO devil is often in the implementation details, for example:

  - Review structured data is still implemented but now relies on lazy-loaded JS, so it can’t be indexed

  - Second-level navigation is still present but now relies on an onclick event to be JS-rendered, so it isn’t crawlable

  - Old images attracting a high share of backlinks are not being 301-redirected

- If you don’t have data on the old URLs, use tools like Semrush or Sistrix to get a list of former URLs and see how their performance changed.

- If you still need to map old URLs to new ones and it’s not possible to use a simple .htaccess rewrite rule, use ChatGPT with Advanced Data Analysis to help you.

- Once you’ve ensured that the top pages 301-redirect and that the new URL versions have their core optimizations in place, do a full new vs. old crawl comparison. You can then assess which additional configurations to implement in the new pages based on impact and effort, from structured data to Core Web Vitals (CWV) status.

- Remember to segment the identified SEO issues and actions by page type to facilitate execution on those that matter.

- Monitor the crawlability, indexability, rankings, and clicks of your old and new Web versions via Google Search Console (GSC) and third-party tools to confirm they evolve positively.

- Remember to leverage GSC’s Crawl Stats report, especially if you don’t have access to log files.

- To facilitate visibility tracking across properties, blend GSC data in Looker Studio to track its evolution in an easy-to-access, shareable dashboard.
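To make the redirect checks from the first step of this list more concrete, here’s a minimal Python sketch that traces redirect chains and flags redirect loops, non-301 hops, multi-hop chains, and destinations that don’t return 200. It assumes you’ve exported your list-mode crawl into a dictionary mapping each URL to its status code and `Location` target; the data shape and function names are hypothetical. Destination relevance and indexability still need to be assessed separately.

```python
from dataclasses import dataclass, field

REDIRECT_CODES = {301, 302, 303, 307, 308}


@dataclass
class RedirectReport:
    start: str
    hops: list = field(default_factory=list)  # (url, status) for each redirect followed
    final_url: str = ""
    final_status: int = 0  # 0 means the URL wasn't found in the crawl export
    loop: bool = False


def trace_redirects(start, responses, max_hops=10):
    """Follow redirects from start, using responses: url -> (status, location or None)."""
    report = RedirectReport(start=start)
    seen = set()
    url = start
    while True:
        if url in seen:
            report.loop = True  # we came back to a URL already visited in this chain
            break
        seen.add(url)
        status, location = responses.get(url, (0, None))
        if status in REDIRECT_CODES and location and len(report.hops) < max_hops:
            report.hops.append((url, status))
            url = location
        else:
            break
    report.final_url = url
    report.final_status = responses.get(url, (0, None))[0]
    return report


def redirect_issues(report):
    """Summarize the redirect problems found for one starting URL."""
    problems = []
    if report.loop:
        problems.append("redirect loop")
    if any(status != 301 for _, status in report.hops):
        problems.append("non-301 redirect in chain")
    if len(report.hops) > 1:
        problems.append(f"redirect chain with {len(report.hops)} hops")
    if not report.loop and report.final_status != 200:
        problems.append(f"destination returns {report.final_status}")
    return problems
```

Running `redirect_issues(trace_redirects(url, responses))` over your top URLs gives a list of problems per URL that you can sort and split by page type, as suggested above.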

It’s in our hands to minimize the potential negative effects of our current SEO nightmares. I hope these tips help you to wake up from them 🙂 Happy SEO Halloween!